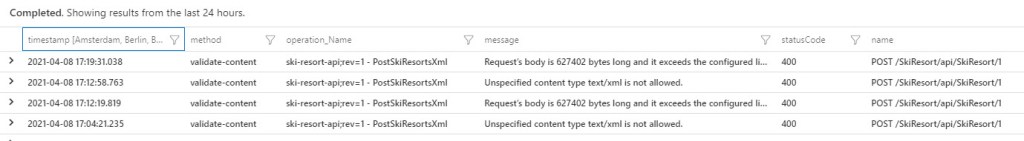

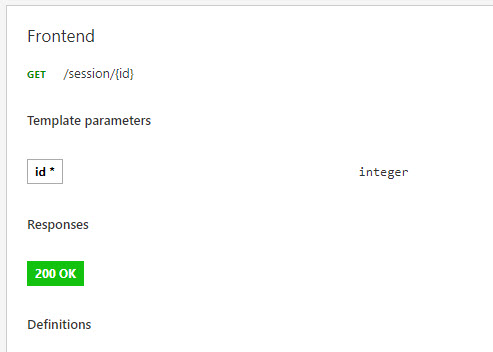

I gave a talk at Integrate 2022 in London about the lifecycle of Logic Apps Standard, and I thought it would be a good idea to write down some key takeaways as blog posts.

Today (June 2022), handling managed connections while developing Logic Apps Standard in Visual Studio Code is not straightforward. The Visual Studio Code extension for Logic Apps Standard expects you to create the connections from within the editor. This is not ideal, as more and more of us strive to create our Azure resources with code (ARM, Bicep or Terraform), and you cannot use existing connections. Another problem is that when a connection is created, a token (connectionKey) is added to the local.settings.json file, and it is valid for only 7 days. If you want to work with a Logic App for longer than 7 days, or have a peer continue the work, the connections need to be recreated.

The main idea for solving these problems is to create the managed connections with Bicep and save the connectionKeys in a Key Vault. Once the connectionKeys are saved in Key Vault, your app settings can reference the secrets there. The image below shows the steps and references.

Saving the connection key

I prefer to create my resources with Bicep. That said, what I describe here can also be achieved with ARM and most likely with Terraform (not tested). To create a connection resource you use the Microsoft.Web/connections resource type. I will not dive into creating the connections here; the documentation for that can be found here.
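For orientation, a connection resource could look roughly like the sketch below. This is not the output of my script, only a minimal illustration. The parameter names (`location`, `DisplayName`, `apiKey`), the choice of the Bing Maps connector and its `api_key` parameter name are assumptions made for the example, and Bicep may warn about `kind` because the published type definition for this resource is incomplete.

```bicep
// Minimal sketch of a managed connection (assumed names, Bing Maps as example)
param location string = resourceGroup().location
param DisplayName string = 'bingmaps'

@secure()
param apiKey string // assumption: the connector authenticates with an API key

resource connection_resource 'Microsoft.Web/connections@2016-06-01' = {
  name: DisplayName
  location: location
  // 'V2' is the kind used for connections consumed by Logic Apps Standard
  kind: 'V2'
  properties: {
    displayName: DisplayName
    api: {
      // Resolves to /subscriptions/<id>/providers/Microsoft.Web/locations/<location>/managedApis/bingmaps
      id: subscriptionResourceId('Microsoft.Web/locations/managedApis', location, 'bingmaps')
    }
    parameterValues: {
      api_key: apiKey // assumption: parameter name expected by the connector
    }
  }
}
```

The symbolic name `connection_resource` is the same one the connectionKey snippet later in this post refers to.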

Note: I have written a script to help you generate Bicep for the connections. It's not bulletproof, but it saves time getting the main parts in place. You can find the script here.

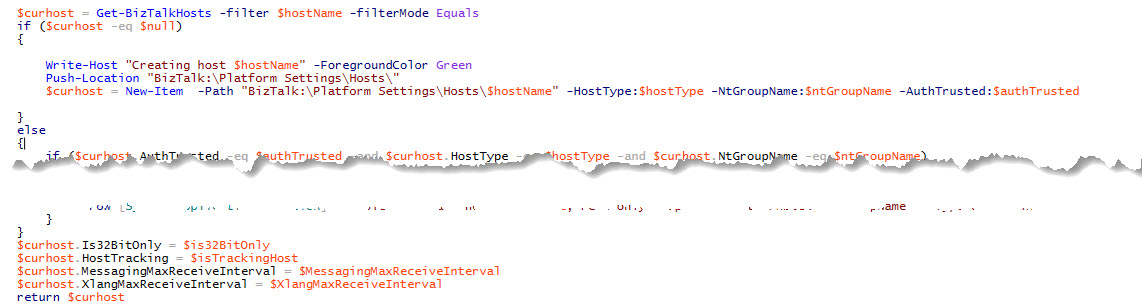

One of the things the script does is add a section to the generated Bicep that saves the connectionKey to Key Vault if the target is a lab or dev environment. Below you can see the code that gets and saves the connectionKey. Each time you deploy, a new version of the secret is created with a new connectionKey that is valid for the number of days set in the validityTimeSpan variable.

    // Handle connectionKey
    param baseTime string = utcNow('u')

    var validityTimeSpan = {
      validityTimeSpan: '30'
    }
    var validTo = dateTimeAdd(baseTime, 'P${validityTimeSpan.validityTimeSpan}D')
    var key = environment == 'lab' || environment == 'dev' ? connection_resource.listConnectionKeys('2018-07-01-preview', validityTimeSpan).connectionKey : 'Skipped'

    resource kv 'Microsoft.KeyVault/vaults@2021-11-01-preview' existing = {
      name: kv_name
    }

    resource kvConnectionKey 'Microsoft.KeyVault/vaults/secrets@2021-11-01-preview' = if (environment == 'lab' || environment == 'dev') {
      parent: kv
      name: '${DisplayName}-connectionKey'
      properties: {
        value: key
        attributes: {
          exp: dateTimeToEpoch(validTo)
        }
      }
    }

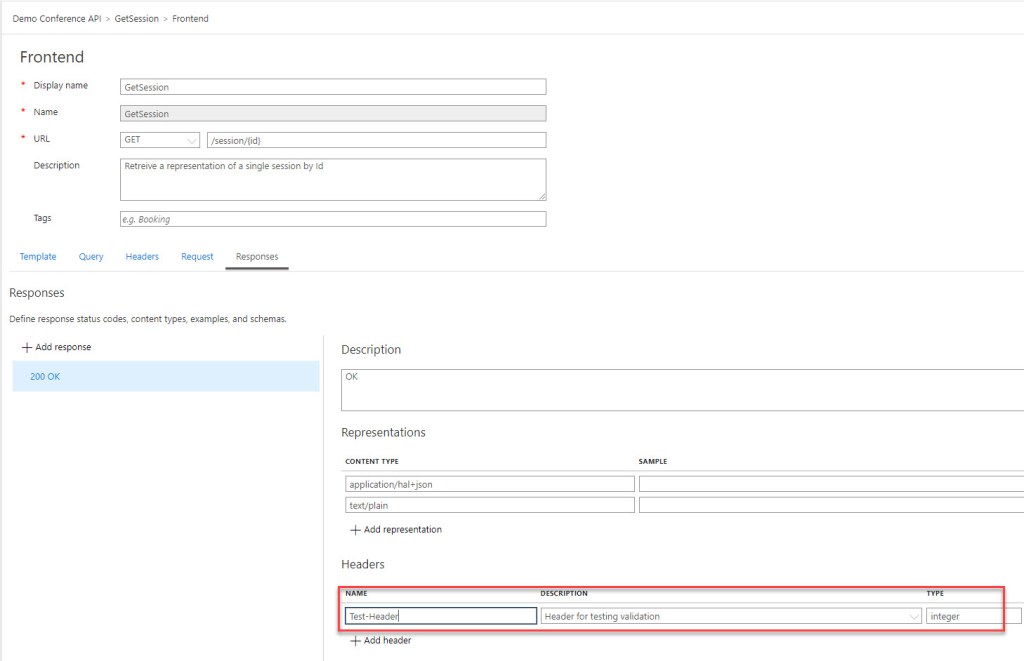

Using existing connections in Visual Studio Code

To use existing connections in Visual Studio Code you must write the managedApiConnections element in the connections.json file yourself. As you can see in the example below, the parameter element under authentication references an application setting.

    {
      "managedApiConnections": {
        "bingmaps": {
          "api": {
            "id": "/subscriptions/---your subscription---/providers/Microsoft.Web/locations/westeurope/managedApis/bingmaps"
          },
          "authentication": {
            "type": "Raw",
            "scheme": "Key",
            "parameter": "@appsetting('bingmaps-connectionKey')"
          },
          "connection": {
            "id": "/subscriptions/---your subscription---/resourcegroups/---your resource group----/providers/microsoft.web/connections/bingmaps"
          },
          "connectionRuntimeUrl": "https://3b829fe9f0975a92.14.common.logic-westeurope.azure-apihub.net/apim/bingmaps/788bd6f6ab024898a829cb4e9b463d1d"
        }
      }
    }
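If you deploy the connection with Bicep, the two values that are specific to your environment (the connection id and the connectionRuntimeUrl) can be surfaced as deployment outputs instead of being copied from the portal. The sketch below assumes the connection already exists in the target resource group and is named by a hypothetical `connectionName` parameter. It uses the `reference()` function because `connectionRuntimeUrl` is not part of the published Bicep type for this resource.

```bicep
// Sketch: expose the values needed in connections.json as outputs
param connectionName string = 'bingmaps' // assumption: name of an existing connection

resource connection_resource 'Microsoft.Web/connections@2016-06-01' existing = {
  name: connectionName
}

// Goes into "connection": { "id": ... }
output connectionId string = connection_resource.id

// Goes into "connectionRuntimeUrl"
output connectionRuntimeUrl string = reference(connection_resource.id, '2016-06-01', 'full').properties.connectionRuntimeUrl
```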

Locally, the application setting lives in your local.settings.json file, which should not be committed to source control as it will contain secrets. In the example below, the bingmaps-connectionKey element refers to the connectionKey we saved in Key Vault.

    {
      "IsEncrypted": false,
      "Values": {
        "AzureWebJobsStorage": "UseDevelopmentStorage=true",
        "FUNCTIONS_WORKER_RUNTIME": "node",
        "WORKFLOWS_TENANT_ID": "---your tenant id---",
        "WORKFLOWS_SUBSCRIPTION_ID": "---your subscription---",
        "WORKFLOWS_RESOURCE_GROUP_NAME": "---your resource group---",
        "WORKFLOWS_LOCATION_NAME": "---your region---",
        "WORKFLOWS_MANAGEMENT_BASE_URI": "https://management.azure.com/",
        "bingmaps-connectionKey": "@Microsoft.KeyVault(VaultName=---your key vault---;SecretName=bingmaps-connectionKey)"
      }
    }

With these steps taken you should be able to use the existing connection.
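The setting name and Key Vault reference follow a fixed pattern, so they can also be produced by the same deployment that stores the secret. The sketch below is only string interpolation; the parameter names `kv_name` and `DisplayName` mirror the ones used in the connectionKey snippet, and the output names are made up for the example.

```bicep
// Sketch: emit the local.settings.json entry for a connection as deployment outputs
param kv_name string
param DisplayName string = 'bingmaps'

// Name of the app setting, matching @appsetting('<name>') in connections.json
output localSettingsName string = '${DisplayName}-connectionKey'

// Value of the app setting: a Key Vault reference to the stored secret
output localSettingsValue string = '@Microsoft.KeyVault(VaultName=${kv_name};SecretName=${DisplayName}-connectionKey)'
```

Because the value is a reference and not the key itself, it does not need to change when a new version of the secret is deployed.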

Helper script

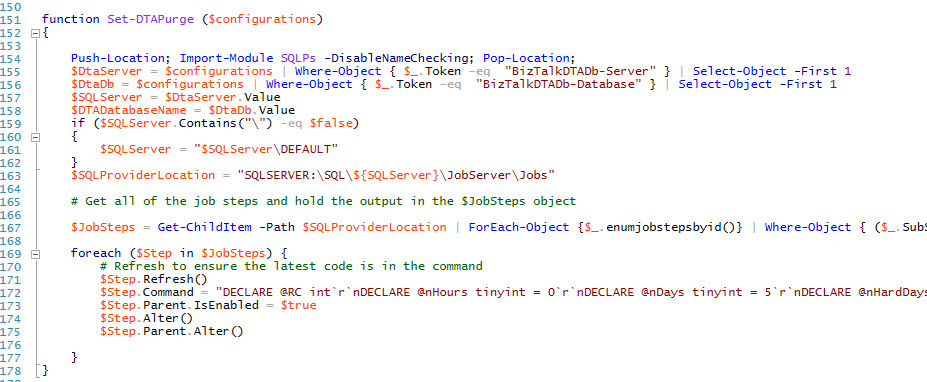

I have written a script to extract all connections in a resource group; you can find it here. Get-UpdatedManagedConnectionFiles.ps1 generates files containing connection information, based on the connections in the resource group you provide, for use in Visual Studio Code.

It assumes you have stored the connectionKeys in Key Vault.

The script calls Generate-ConnectionsRaw.ps1 and Generate-Connections.ps1 and saves the files in a folder you provide. See the table below for details on the saved files.

| File | Description |
|---|---|
| .connections.az.json | Shows how the managed connections should look in the deployment. |
| .connections.code.json | Shows how the managed connections should look in Visual Studio Code. Copy the contents of the managedApiConnections element to your connections.json. |
| .connectionKeys.txt | Lines you can use in your local.settings.json to match the connection information created in .connections.code.json. Here we have the connectionKeys themselves, just as when the connections are created the normal way in VS Code. |
| .KvReference.txt | Lines you can use in your local.settings.json to match the connection information created in .connections.code.json. Here we use Key Vault references instead, which is the best solution as you don't need to update the local.settings.json when the keys are updated. |

Conclusion

It is possible to work around the current limitations in handling managed connections when working with Logic Apps Standard in Visual Studio Code. I hope you find this handy.

You can download the source code from the GitHub repo.
